Content Types

All Content Types

Blog

Client Stories

Expert Insights

Reports & Whitepapers

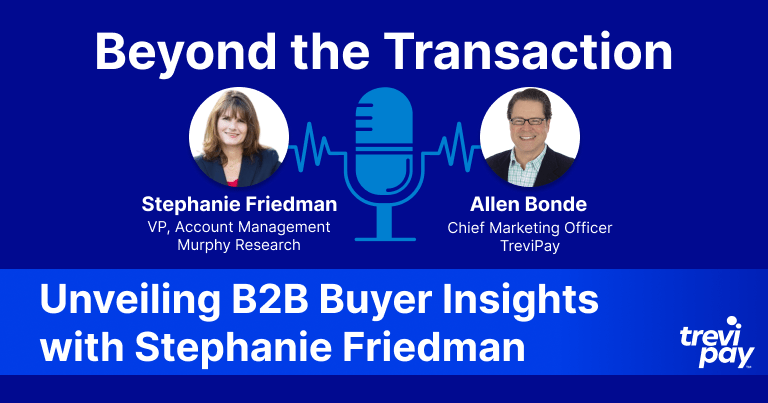

Podcast

Videos

Webinars

Pain Points

All Pain Points

Cash Flow & Working Capital

Customer Experience and Loyalty

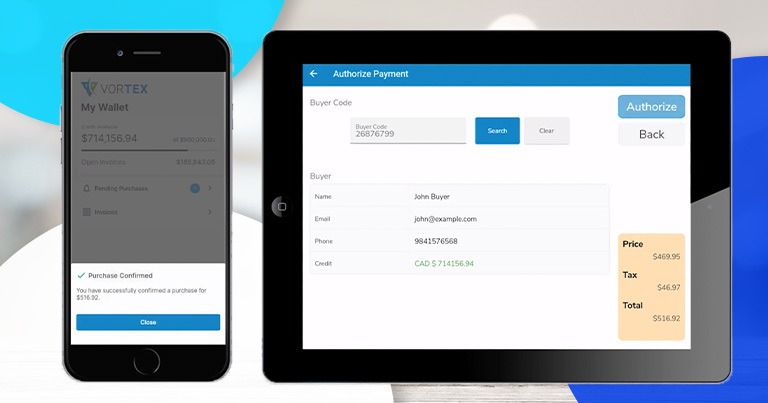

B2B eCommerce

B2B Payments & A/R

Digital Transformation

Fraud & Risk Management

Global Expansion

Geography

All Geography

Americas

EMEA

APAC

Industries

All Industries

Corporate Travel

Manufacturing

Retail